Show You is an interactive installation in the form of a darkroom, where one gets to virtually “develop” one of their own Instagram photos in a developer tray.

Here is video documentation of Show You.

“Show You” – Darkroom Simulation with Instagram – Thesis Documentation from Zena Koo on Vimeo.

The amount the user moves the piece of paper in the clear-bottomed tray controls the rate at which the randomly chosen personal Instagram photo develops. I chose to select the photographs at random because I hoped to mimic that magic moment in the real darkroom when the photographer first sees the image start to appear on the print in the tray. That moment of delight and surprise was something I was very keen to evoke.
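
Show You does its tracking in Max/Jitter with a webcam, so the Python below is only a minimal sketch of the same idea, assuming grayscale webcam frames given as nested lists of 0-255 values. It measures movement by frame differencing (the mean absolute change per pixel, which also responds to shifts in luminosity) and feeds that into an opacity level between 0 and 1. The function names, the `gain` and `decay` constants, and the choice to let the image slowly fade back out when the paper stops moving are all hypothetical.

```python
from typing import List

# Hypothetical frame format: rows of grayscale pixel values, 0-255.
Frame = List[List[int]]


def motion_amount(prev: Frame, curr: Frame) -> float:
    """Mean absolute per-pixel difference between two frames, scaled to 0..1.

    Moving the paper (or changing how much light it reflects) raises this value.
    """
    total = 0
    count = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += abs(a - b)
            count += 1
    return total / (count * 255) if count else 0.0


def step_development(level: float, motion: float,
                     gain: float = 0.5, decay: float = 0.01) -> float:
    """Advance the image's opacity (0 = blank paper, 1 = fully developed).

    More motion develops the image faster; with no motion it slowly fades out.
    gain and decay are hypothetical tuning constants, not values from the piece.
    """
    level += gain * motion - decay
    return max(0.0, min(1.0, level))  # clamp to the valid opacity range


if __name__ == "__main__":
    # Toy run: alternate two synthetic frames to simulate agitating the paper.
    a = [[100, 100], [100, 100]]
    b = [[100, 200], [150, 100]]
    level, prev = 0.0, a
    for i in range(20):
        curr = b if i % 2 == 0 else a
        level = step_development(level, motion_amount(prev, curr))
        prev = curr
    print(f"opacity after 20 agitated frames: {level:.2f}")
```

Accumulating a level per frame, rather than mapping motion straight to opacity, is what makes the print appear gradually and hold its state between movements, closer to how a real print builds up in the developer.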

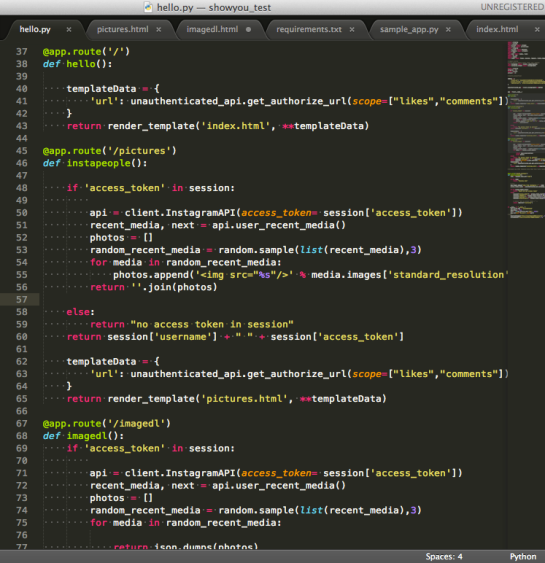

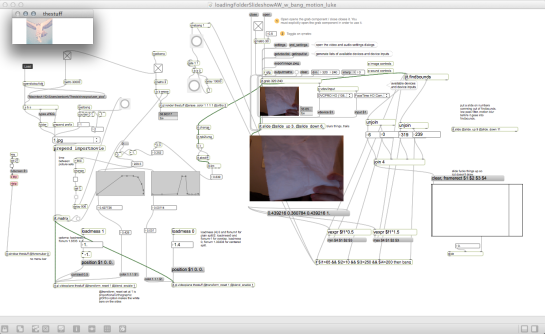

How? I am using the Instagram API through its Python wrapper to access the user’s images via the OAuth process. From there I source two randomly chosen images from their recent feed (the last 300 photos, or fewer if they have posted less). Max/Jitter then retrieves the two photos I just sourced, and a webcam watches the paper in the water, motion tracking its movement and luminosity variance. This tracking controls the rate at which the image appears to develop (fading in and fading out).

At the peak of its development, the same image appears for a brief moment in a hanging frame inside the booth, which is meant to make a few points. The display time is short to comment on what our mobile phone photos have become: photos on our phones that we take quickly and easily and often forget about. The frame references how rarely we print the many images we take, and how seldom we frame them or put them into albums. We use Instagram and its filters on our phones, and in doing so we reference the past and our generational brand of nostalgia. But what will our future generations’ nostalgia look like when all of our photos live in our phones and storage devices? (Decidedly unromantic, IMO.) By making the whole immersive environment of a darkroom simulation, I am also referencing my own nostalgia for darkroom development, a process that has largely been abandoned.
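
The photo-selection step described above (two random images from the last 300 or fewer photos) can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the script from the installation: the Instagram API calls are abstracted behind a hypothetical `fetch_page` callable that returns one page of photo URLs plus a pagination cursor, because the original wrapper’s calls are not reproduced here. `collect_recent`, `pick_two`, and the fake feed `demo_fetch` are illustrative names.

```python
import random
from typing import Callable, List, Optional, Tuple

# Hypothetical page fetcher: takes a pagination cursor (None for the first
# page) and returns (photo_urls, next_cursor); next_cursor is None at the end.
# In the installation this role was played by the Python Instagram wrapper
# after OAuth; its real call signatures are not shown here.
PageFetcher = Callable[[Optional[str]], Tuple[List[str], Optional[str]]]

MAX_RECENT = 300  # "the last [up to] 300 photos"


def collect_recent(fetch_page: PageFetcher, limit: int = MAX_RECENT) -> List[str]:
    """Follow pagination until `limit` photos are gathered or the feed ends."""
    photos: List[str] = []
    cursor: Optional[str] = None
    while len(photos) < limit:
        page, cursor = fetch_page(cursor)
        photos.extend(page)
        if cursor is None or not page:
            break
    return photos[:limit]


def pick_two(photos: List[str], rng: Optional[random.Random] = None) -> List[str]:
    """Choose two distinct photos at random (fewer if the feed is tiny)."""
    if rng is None:
        rng = random.Random()
    return rng.sample(photos, k=min(2, len(photos)))


def demo_fetch(cursor: Optional[str]) -> Tuple[List[str], Optional[str]]:
    """Hypothetical fake feed of 500 photos served 33 at a time."""
    start = int(cursor) if cursor else 0
    end = min(start + 33, 500)
    urls = [f"photo_{i}.jpg" for i in range(start, end)]
    return urls, (str(end) if end < 500 else None)


if __name__ == "__main__":
    recent = collect_recent(demo_fetch)
    print(len(recent), pick_two(recent))
```

Sampling without replacement guarantees the two chosen photos differ, and capping the pool at 300 keeps the surprise within the user’s recent history rather than their entire archive.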

In short, I’m trying to slow down our quick and dirty mobile-phone photography by having you experience a meditative darkroom process, and I wanted to create a personal, immersive environment where this could happen. I got a great reception at the Spring Show 2013, but you either “got it” or you didn’t, which is all OK. It took a while to pare the concept down from a full-scale, personalizable exhibition to what this iteration has become. If I were to install this in a more public space, I would make some technical tweaks along with aesthetic and user-experience changes, but I’m happy with the way it turned out and the experience I am/was offering.

Some screenshots of my Max/Jitter patch and my Python script.